I have three physical nodes: two HP ProLiant 320e Gen8v2 servers and an HP MicroServer Gen8, each with two Gbit NICs and an iLO interface.

One of the NICs is connected to the external network. The other NIC and the iLO are connected to an internal switch, which reaches the external network through a NAT gateway. This is necessary for installing updates, syncing time and reaching the management interface from outside at all.

The network configuration (/etc/network/interfaces) looks like this on all nodes (shown for node01; only the vmbr0 address differs per node):

```
auto lo
iface lo inet loopback

# the internal interface, used for storage traffic,
# cluster traffic and internal inter-VM communication too.
# this should really be split into VLANs, using
# a bond connected to two different physical switches at least;
# an extra (10G) interface for storage would be a plus
auto eth0
iface eth0 inet manual

# bridge to allow VMs to be connected to the physical interface
auto vmbr0
iface vmbr0 inet static
        address 10.10.67.10
        netmask 255.255.255.0
        gateway 10.10.67.1
        bridge_ports eth0
        bridge_stp on
        bridge_fd 0

# the external interface, connected to the outside world;
# it has no IP here, as the host is not directly reachable from
# the outside, but it still needs outbound access itself (see gateway above)
auto eth1
iface eth1 inet manual

# bridge to allow VMs to be connected to the physical interface
auto vmbr1
iface vmbr1 inet manual
        bridge_ports eth1
        bridge_stp on
        bridge_fd 0
```

Each of the ProLiant servers has two 250GB SSDs and two 1TB SATA drives, configured as follows:

- Array 1: 2x250GB SSD
  - Volume 1: 2x65GB RAID1 (root filesystem and swap)
  - Volume 2: 2x185GB RAID0 (Ceph cache OSD)
- Array 2: 1x1TB SATA
  - Volume 3: 1x1TB RAID0 (Ceph storage OSD)
- Array 3: 1x1TB SATA
  - Volume 4: 1x1TB RAID0 (Ceph storage OSD)

The MicroServer has four 2TB SATA drives, configured in a similar fashion:

- Array 1: 2x2TB SATA
  - Volume 1: 2x130GB RAID1 (root filesystem and swap)
  - Volume 2: 2x1TB RAID0 (Ceph storage OSD)
  - Volume 3: 2x870GB RAID1 (backup storage, software RAID0, NFS export)
- Array 2: 2x2TB SATA
  - Volume 4: 2x1TB RAID0 (Ceph storage OSD)
  - Volume 5: 2x1TB RAID1 (backup storage, software RAID0, NFS export)
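The layouts above can be cross-checked with a little shell arithmetic: the slices on each physical drive should add up to the drive's size, a RAID1 volume gives you one slice of usable space, and a RAID0 volume gives you the sum of its slices. This is a minimal sketch that only prints numbers and touches no disks. It assumes 1TB = 1000GB (vendor units) and reads "software RAID0" as a Linux md RAID0 across Volumes 3 and 5; the helper `usable` is hypothetical.

```shell
#!/bin/sh
# Usable size in GB of a hardware RAID volume made of equal slices on
# several drives: RAID1 keeps one slice's worth, RAID0 adds all slices up.
usable() {
  level=$1 disks=$2 slice=$3
  case $level in
    raid1) echo "$slice" ;;
    raid0) echo $((disks * slice)) ;;
    *) echo "unknown RAID level: $level" >&2; return 1 ;;
  esac
}

# ProLiant: both slices of each 250GB SSD should use the whole drive
echo "ProLiant SSD slices: $((65 + 185)) of 250 GB"
echo "  Vol1 root/swap (RAID1):  $(usable raid1 2 65) GB"
echo "  Vol2 Ceph cache (RAID0): $(usable raid0 2 185) GB"
echo "  Vol3/Vol4 Ceph OSDs:     $(usable raid0 1 1000) GB each"

# MicroServer: slices on each 2TB drive in Array 1 and Array 2
echo "MicroServer Array 1 slices: $((130 + 1000 + 870)) of 2000 GB"
echo "MicroServer Array 2 slices: $((1000 + 1000)) of 2000 GB"
echo "  Vol2/Vol4 Ceph OSDs:     $(usable raid0 2 1000) GB each"

# Backup (assumption): md RAID0 across Vol3 and Vol5, both hardware RAID1;
# md RAID0 also uses the extra space of the larger member
backup=$(( $(usable raid1 2 870) + $(usable raid1 2 1000) ))
echo "  Backup md RAID0 (Vol3+Vol5): $backup GB"
```

The backup figure is approximate in practice, since md metadata and chunk rounding take a little space.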

Now install Proxmox VE on the three nodes: I chose 32GB for root and 32GB for swap, and entered the corresponding local IP address and gateway as shown above (the network configuration gets overwritten with the file above anyway), the local hostname, the password and the admin email address. Done!

Log in to the newly installed nodes to set up the network, install any additional software you need, and install Ceph:

```shell
# root@nodeXX:~#
aptitude update
aptitude dist-upgrade
aptitude install mc openntpd ipmitool
pveceph install -version hammer
mcedit /etc/network/interfaces   # copy & paste the configuration from above
reboot
```

Once this is done on all nodes, create the cluster and the initial Ceph configuration.

On the first node:

```shell
# root@node01:~#
pvecm create cluster1
pveceph init --network 10.10.67.0/24
pveceph createmon
```

On all the other nodes:

root@nodeXX:~#

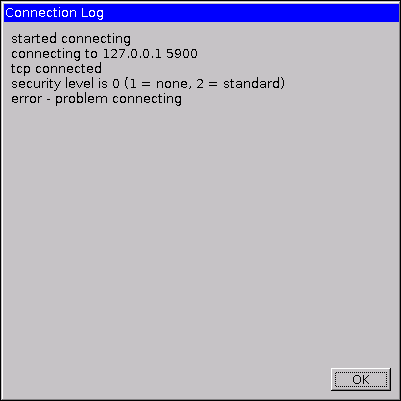

pvecm add 10.10.67.10 # this is the IP of the first node

pveceph createmon
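As far as I understand it, `pveceph createmon` looks for a local address inside the network given to `pveceph init --network`, so every node's vmbr0 address has to fall into 10.10.67.0/24. A minimal POSIX shell sketch to check that; `ip2int` and `in_net` are hypothetical helpers, and the addresses are the node IPs used in this setup.

```shell
#!/bin/sh
# ip2int A.B.C.D: print the IPv4 address as a 32-bit integer.
ip2int() {
  old_ifs=$IFS
  IFS=.
  set -- $1          # split the address into its four octets
  IFS=$old_ifs
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# in_net ADDR NET/PREFIX: succeed if ADDR lies inside NET/PREFIX.
in_net() {
  addr=$(ip2int "$1")
  net=$(ip2int "${2%/*}")
  prefix=${2#*/}
  # network mask with the top $prefix bits set, limited to 32 bits
  mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  [ $(( addr & mask )) -eq $(( net & mask )) ]
}

# node addresses checked against the network passed to pveceph init
for ip in 10.10.67.10 10.10.67.20 10.10.67.30; do
  if in_net "$ip" 10.10.67.0/24; then
    echo "$ip is inside 10.10.67.0/24"
  else
    echo "$ip is OUTSIDE 10.10.67.0/24"
  fi
done
```

The check relies on 64-bit shell arithmetic, which both dash and bash provide.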

Check the status of the cluster and of Ceph. Also check on every node that /etc/hosts contains the names of all nodes, and add any that are missing now:

```shell
pvecm status     # shows all nodes
ceph status      # shows all monitors
cat /etc/hosts   # should list all node names, including the FQDNs

10.10.67.10 node01.local node01 pvelocalhost
10.10.67.20 node02.local node02
10.10.67.30 node03.local node03
```
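Checking /etc/hosts by hand on three nodes is easy to get wrong, so here is a minimal POSIX shell sketch that reports which node names are missing. The names are the ones from this setup; `check_hosts` is a hypothetical helper that only reads the file, so adding the missing entries is still up to you.

```shell
#!/bin/sh
# check_hosts FILE NAME...: print every NAME that is not listed as a
# hostname in FILE; returns non-zero if at least one is missing.
check_hosts() {
  hosts_file=$1
  shift
  missing=0
  for name in "$@"; do
    # compare against hostname fields (2..NF) only, skipping comment lines
    if ! awk -v n="$name" '
        $1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
        END { exit !found }' "$hosts_file"; then
      echo "missing: $name"
      missing=1
    fi
  done
  return "$missing"
}

if check_hosts /etc/hosts node01 node02 node03 \
    node01.local node02.local node03.local; then
  echo "all nodes present on $(uname -n)"
else
  echo "fix /etc/hosts on $(uname -n)"
fi
```

Matching whole fields rather than using a plain grep avoids a false positive where `node01` would match inside `node01.local`.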